Ruthlessly Helpful

Stephen Ritchie's offerings of ruthlessly helpful software engineering practices.


Platform Engineering

Posted on November 14, 2025

Platform Engineering: The Strategic Practice Your Team Actually Needs

In previous posts, I discussed the concept of “ruthlessly helpful” practices, which are those that are practical, valuable, widely accepted, and provide clear archetypes to follow. Today, I want to apply this framework to one of the most significant developments in software engineering since I wrote “Pro .NET Best Practices”: platform engineering.

If you’re unfamiliar with the term, platform engineering is the practice of building and maintaining internal developer platforms that provide self-service capabilities and reduce cognitive load for development teams. Think of it as the evolution of DevOps, focused specifically on developer experience and productivity.

But before you dismiss this as another industry buzzword, let me walk through why platform engineering might be the most ruthlessly helpful practice your organization can adopt in 2025.

The Problem: Death by a Thousand Configurations

Modern software development requires an overwhelming array of tools, services, and configurations. Check out Curtis Collicutt’s The Numerous Pains of Programming.

A typical application today might need:

- Container orchestration (Kubernetes, Docker Swarm)

- CI/CD pipelines (GitHub Actions, Jenkins, Azure DevOps)

- Monitoring and observability (Prometheus, Grafana, New Relic)

- Security scanning (Snyk, SonarQube, OWASP tools)

- Database management (multiple engines, backup strategies, migrations)

- Infrastructure as code (Terraform, ARM templates, CloudFormation)

- Service mesh configuration (Istio, Linkerd)

- Certificate management, secrets handling, networking policies…

The list goes on. Each tool solves important problems, but the cognitive load of managing them all is crushing development teams. I regularly encounter developers who spend more time configuring tools than writing business logic.

This isn’t a tools problem. This is a systems problem. Individual developers shouldn’t need to become experts in Kubernetes networking or Terraform state management to deploy a web application. That’s where platform engineering comes in.

What Platform Engineering Actually Means

Platform engineering creates an abstraction layer between developers and the underlying infrastructure complexity. Instead of each team figuring out how to deploy applications, manage databases, or set up monitoring, the platform team provides standardized, self-service capabilities.

A well-designed internal developer platform (IDP) might offer:

- Golden paths: Opinionated, well-supported ways to accomplish common tasks

- Self-service provisioning: Developers can create environments, databases, and services without tickets or waiting

- Standardized tooling: Consistent CI/CD, monitoring, and security across all applications

- Documentation and examples: Clear guidance for common scenarios and troubleshooting

The goal isn’t to eliminate flexibility—it’s to make the common cases easy and the complex cases possible.
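To make the golden path idea a little more concrete, here is a minimal Python sketch of a self-service request with opinionated defaults that a team can still override. Every name in it (`ServiceRequest`, `GOLDEN_PATH_RUNTIMES`, the fields, and the default values) is hypothetical and chosen only for illustration; it does not reflect any particular IDP product.

```python
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical set of runtimes the platform team supports on its golden path.
GOLDEN_PATH_RUNTIMES = {"dotnet", "python", "node"}


@dataclass(frozen=True)
class ServiceRequest:
    """A self-service request; the defaults encode the platform's opinions."""
    name: str
    runtime: str = "dotnet"
    replicas: int = 2
    monitoring: bool = True
    database: Optional[str] = None  # no database unless the team asks for one

    def on_golden_path(self) -> bool:
        """True when the request stays within the supported defaults."""
        return self.runtime in GOLDEN_PATH_RUNTIMES and self.monitoring


# Common case: one argument, everything else comes from the defaults.
simple = ServiceRequest(name="orders-api")

# Complex case: still possible, but the deviation is explicit and visible.
custom = replace(simple, name="ml-scoring", runtime="rust", replicas=5)
```

The design choice worth noticing is that leaving the golden path is allowed but detectable, so the platform team can see where its defaults are not serving teams well.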

Evaluating Platform Engineering as a Ruthlessly Helpful Practice

Let’s apply our four criteria to see if platform engineering deserves your team’s attention and investment.

1. Practicable: Is This Realistic for Your Organization?

Platform engineering is most practicable for organizations with:

- Multiple development teams (usually 3+ teams) facing similar infrastructure challenges

- Repeated patterns in application deployment, monitoring, or data management

- Engineering leadership support for investing in developer productivity

- Sufficient technical expertise to build and maintain platform capabilities

If you’re a small team with simple deployment needs, platform engineering might be overkill. But if you have multiple teams repeatedly solving the same infrastructure problems, it becomes highly practical.

Implementation Reality Check: Start small. You don’t need a comprehensive platform on day one. Begin with the most painful, repetitive task your teams face (often deployment or environment management) and build from there.

2. Generally Accepted: Is This a Proven Practice?

Platform engineering has moved well beyond the experimentation phase. Major organizations like Netflix, Spotify, Google, and Microsoft have demonstrated significant value from platform investments. The practice has enough adoption that:

- Dedicated conferences and community events focus on platform engineering

- Vendor ecosystem has emerged with tools specifically for building IDPs

- Job market shows increasing demand for platform engineers

- Academic research provides frameworks and measurement approaches

More importantly, platform engineering builds on established practices, particularly those aligned with the principles of automation, standardization, and the reduction of manual, error-prone processes.

3. Valuable: Does This Solve Real Problems?

Platform engineering addresses several measurable problems:

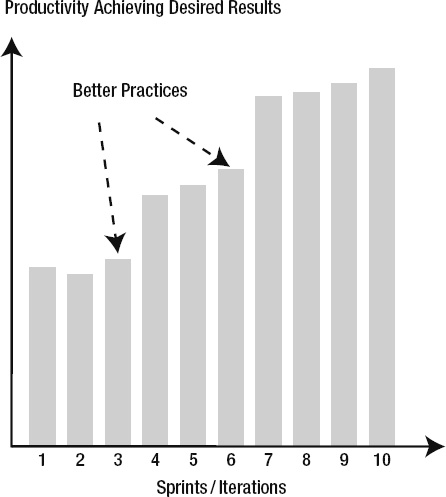

Developer Productivity: Teams report 20-40% improvements in deployment frequency and reduced time to productivity for new developers.

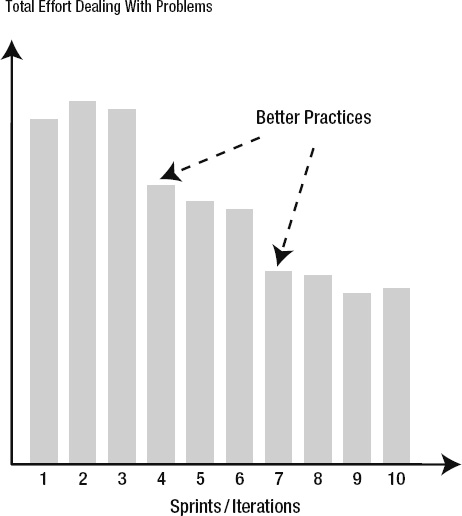

Cognitive Load Reduction: Developers can focus on business logic rather than infrastructure complexity.

Consistency and Reliability: Standardized platforms reduce environment-specific issues and improve overall system reliability.

Security and Compliance: Centralized platforms make it easier to implement and maintain security policies and compliance requirements.

Cost Optimization: Shared infrastructure and automated resource management typically reduce cloud costs.

The key is measuring these benefits in your specific context. Platform engineering is valuable when the cost of building and maintaining the platform is less than the productivity gains across all development teams.
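One way to make that comparison concrete is a back-of-the-envelope calculation. The Python sketch below is only an illustration: the function name, the parameters, the 46 working weeks per year, and every number in the example are assumptions, not figures from a real organization.

```python
def platform_net_value(
    annual_platform_cost: float,
    developers: int,
    hours_saved_per_dev_per_week: float,
    loaded_hourly_rate: float,
    working_weeks: int = 46,  # assumed working weeks per year
) -> float:
    """Annual productivity gain across all developers minus annual platform cost."""
    annual_gain = (
        developers
        * hours_saved_per_dev_per_week
        * working_weeks
        * loaded_hourly_rate
    )
    return annual_gain - annual_platform_cost


# Illustrative inputs: 40 developers each saving 3 hours per week at a loaded
# rate of 100 per hour, against a platform that costs 400,000 per year.
net = platform_net_value(
    annual_platform_cost=400_000,
    developers=40,
    hours_saved_per_dev_per_week=3,
    loaded_hourly_rate=100,
)
worth_it = net > 0  # the platform pays for itself only when this is True
```

With these made-up inputs the gain is 552,000 per year, so the net value is 152,000. The hours saved per developer is the input to measure most carefully, because the result is very sensitive to it.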

4. Archetypal: Are There Clear Examples to Follow?

Yes, with important caveats. The platform engineering community has produced excellent resources:

- Reference architectures for common platform components

- Tools for building IDPs, such as the open source Backstage and Crossplane and the commercial Port

- Case studies from organizations at different scales and maturity levels

- Measurement frameworks for tracking platform adoption and effectiveness

However, every organization’s platform needs are somewhat unique. The examples provide direction, but you’ll need to adapt them to your specific technology stack, team structure, and business requirements.

Implementation Strategy: Learning from Continuous Integration

Platform engineering reminds me of continuous integration adoption patterns from the early 2000s. Teams that succeeded with CI followed a predictable pattern:

- Started with immediate pain points (broken builds, integration problems)

- Built basic automation before attempting sophisticated workflows

- Proved value incrementally rather than trying to solve everything at once

- Invested in team education alongside technical implementation

Platform engineering follows similar patterns. Here’s a practical approach:

Phase 1: Identify and Automate the Biggest Pain Point

Survey your development teams to identify their most time-consuming, repetitive infrastructure tasks. Common starting points:

- Application deployment and environment management

- Database provisioning and management

- CI/CD pipeline setup and maintenance

- Monitoring and alerting configuration

Choose one area and build a simple, self-service solution. Success here creates momentum for broader platform adoption.

Phase 2: Standardize and Document

Once you have a working solution for one problem:

- Document the standard approach with examples

- Create templates or automation for common scenarios

- Train teams on the new capabilities

- Measure adoption and gather feedback

Phase 3: Expand Based on Demonstrated Value

Use the success and lessons from Phase 1 to justify investment in additional platform capabilities. Prioritize based on team feedback and measurable impact.

Common Implementation Obstacles

Obstacle 1: “We Don’t Have Platform Engineers”

Platform engineering doesn’t require hiring a specialized team immediately. Start with existing engineers who understand your infrastructure challenges. Many successful platforms begin as side projects that demonstrate value before becoming formal initiatives.

Obstacle 2: “Our Teams Want Different Tools”

This is actually an argument for platform engineering, not against it. Provide standardized capabilities while allowing teams to use their preferred development tools on top of the platform.

Obstacle 3: “We Can’t Afford to Build This”

Calculate the total cost of infrastructure complexity across all your teams. Include developer time spent on deployment issues, environment setup, and tool maintenance. Most organizations discover they’re already paying for platform engineering; they’re just doing it inefficiently, spread across multiple teams.
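As a rough sketch of that calculation, the Python below adds up the weekly hours each team loses to the three categories named above and converts them to an annual cost. The `TeamToil` type, its field names, the 46 working weeks, and the sample numbers are all assumptions for illustration; substitute figures from your own team surveys.

```python
from dataclasses import dataclass


@dataclass
class TeamToil:
    """Hours per developer per week lost to infrastructure work (hypothetical fields)."""
    team: str
    developers: int
    deployment_hours: float
    environment_hours: float
    tool_maintenance_hours: float


def annual_complexity_cost(teams, loaded_hourly_rate, working_weeks=46):
    """Total yearly cost of infrastructure toil across all teams."""
    hours_per_week = sum(
        t.developers
        * (t.deployment_hours + t.environment_hours + t.tool_maintenance_hours)
        for t in teams
    )
    return hours_per_week * working_weeks * loaded_hourly_rate


# Made-up survey results for two teams.
teams = [
    TeamToil("web", developers=6, deployment_hours=1.5,
             environment_hours=1.0, tool_maintenance_hours=0.5),
    TeamToil("data", developers=4, deployment_hours=2.0,
             environment_hours=1.0, tool_maintenance_hours=1.0),
]
current_cost = annual_complexity_cost(teams, loaded_hourly_rate=100)
```

In this example the two teams lose 34 developer-hours per week, which comes to 156,400 per year at the assumed rate. That number is the budget you are already spending, and it is the one to put next to any platform proposal.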

Quantifying Platform Engineering Success

Measure platform engineering impact across three dimensions:

Developer Experience Metrics

- Time from project creation to first deployment

- Number of infrastructure-related support tickets

- Developer satisfaction surveys (quarterly)

- Time new developers need to become productive

Operational Metrics

- Deployment frequency and lead time

- Mean time to recovery from incidents

- Infrastructure costs per application or team

- Security policy compliance rates

Business Impact Metrics

- Feature delivery velocity

- Developer retention rates

- Engineering team scaling efficiency

- Customer-facing service reliability
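Several of the operational metrics above can be computed from timestamps most teams already have. The following Python sketch assumes you can export deployment times, commit-to-deploy pairs, and incident start and resolution times; the function names and the sample data are hypothetical, and the definitions are simplified versions of the commonly used ones.

```python
from datetime import datetime, timedelta
from statistics import median


def deployment_frequency(deploy_times, window_days):
    """Average number of deployments per week over the observation window."""
    return len(deploy_times) / (window_days / 7)


def median_lead_time(changes):
    """Median commit-to-deploy duration; changes are (committed_at, deployed_at) pairs."""
    return median(deployed - committed for committed, deployed in changes)


def mean_time_to_recovery(incidents):
    """Mean duration of incidents given as (started_at, resolved_at) pairs."""
    if not incidents:
        raise ValueError("at least one incident is required")
    durations = [resolved - started for started, resolved in incidents]
    return sum(durations, timedelta()) / len(durations)


# Made-up data for one week.
changes = [
    (datetime(2025, 11, 3, 9), datetime(2025, 11, 3, 15)),   # 6 hours
    (datetime(2025, 11, 4, 10), datetime(2025, 11, 5, 10)),  # 24 hours
    (datetime(2025, 11, 6, 8), datetime(2025, 11, 6, 10)),   # 2 hours
]
deploys = [deployed for _, deployed in changes]
incidents = [
    (datetime(2025, 11, 4, 12), datetime(2025, 11, 4, 13)),    # 60 minutes
    (datetime(2025, 11, 6, 9), datetime(2025, 11, 6, 9, 30)),  # 30 minutes
]

per_week = deployment_frequency(deploys, window_days=7)
lead_time = median_lead_time(changes)
mttr = mean_time_to_recovery(incidents)
```

Capture these numbers before the platform work starts. Without a baseline, later improvements are hard to attribute to the platform.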

Next Steps: Starting Your Platform Engineering Journey

If platform engineering seems like a ruthlessly helpful practice for your organization:

- Survey your teams to identify the most painful infrastructure challenges

- Calculate the current cost of infrastructure complexity across all teams

- Start small with one high-impact, well-defined problem

- Build momentum by demonstrating clear value before expanding scope

- Invest in education to help teams understand and adopt platform capabilities

Remember, the goal isn’t to build the most sophisticated platform. The goal is to build the platform that most effectively serves the needs of your teams and the business.

Commentary

When I wrote about build automation in “Pro .NET Best Practices,” I focused on eliminating manual, error-prone processes that slowed teams down. Platform engineering is the natural evolution of that thinking, applied to the entire development workflow rather than just the build process.

What’s fascinating to me is how platform engineering validates many of the strategic principles from my book. The most successful platform teams think like product teams. Platform teams understand their customers (developers), measure satisfaction and adoption, and iterate based on feedback. They focus on removing friction and enabling teams to be more effective, rather than just implementing the latest technology.

The biggest lesson I’ve learned watching organizations adopt platform engineering is that culture matters as much as technology. Platforms succeed when they’re built with developers, not for developers. The most effective platform teams spend significant time understanding developer workflows, pain points, and preferences before building solutions.

This mirrors what I observed with continuous integration adoption: technical excellence without organizational buy-in leads to unused capabilities and wasted investment. The teams that succeed with platform engineering treat it as both a technical and organizational transformation.

Looking ahead, I believe platform engineering is (or will become) as fundamental to software development as version control or automated testing. The organizations that master it early will have a significant competitive advantage in attracting talent and delivering software efficiently. Those that ignore it will find themselves increasingly hampered by infrastructure complexity as the software landscape continues to evolve.

Is your organization considering platform engineering? What infrastructure challenges are slowing down your development teams? Share your experiences and questions in the comments below.

Why “Best” Practices Aren’t Always Best

Posted on November 6, 2025

When I titled my book Pro .NET Best Practices back in 2011, I wrestled with that word “best.” It’s a superlative that suggests there’s nothing better, no room for discussion, and no consideration of context. Over the years, I’ve watched teams struggle not because they lacked good practices, but because they blindly adopted “best practices” that weren’t right for their situation.

Today, as development teams face an overwhelming array of tools, frameworks, and methodologies, this challenge has only intensified. The industry produces new “best practices” faster than teams can evaluate them, let alone implement them effectively. It’s time we moved beyond the cult of “best” and embraced something more practical: being ruthlessly helpful.

The Problem with “Best” Practices

The software development industry loves superlatives. We have “best practices,” “cutting-edge frameworks,” and “industry-leading tools.” But here’s what I’ve learned from working with hundreds of development teams: the practice that transforms one team might completely derail another.

Consider continuous deployment—often touted as a “best practice” for modern development teams. For a team with mature automated testing, strong monitoring, and a culture of shared responsibility, continuous deployment can be transformative. But for a team still struggling with manual testing processes and unclear deployment procedures, jumping straight to continuous deployment is like trying to run before learning to walk.

The problem isn’t with the practice itself. The problem is treating any practice as universally “best” without considering context, readiness, and specific team needs.

A Better Framework: Ruthlessly Helpful Practices

Instead of asking “What are the best practices?” we should ask “What practices would be ruthlessly helpful for our team right now?” This shift in thinking changes everything about how we evaluate and adopt new approaches.

A ruthlessly helpful practice must meet four criteria:

1. Practicable (Realistic and Feasible)

The practice must be something your team can actually implement given your current constraints, skills, and organizational context. This isn’t about lowering standards—it’s about being honest about what’s achievable.

A startup with three developers has different constraints than an enterprise team with fifty. A team transitioning from waterfall to agile has different needs than one that’s been practicing DevOps for years. The most elegant practice in the world is useless if your team can’t realistically adopt it.

2. Generally Accepted and Widely Used

While innovation has its place, most teams benefit from practices that have been proven in real-world environments. Generally accepted practices come with community support, documentation, tools, and examples of both success and failure.

This doesn’t mean chasing every trend. It means choosing practices that have demonstrated value across multiple organizations and contexts, with enough adoption that you can find resources, training, and peer support.

3. Valuable (Solving Real Problems)

A ruthlessly helpful practice must address actual problems your team faces, not theoretical issues or problems you think you might have someday. The value should be measurable and connected to outcomes that matter to your stakeholders.

If your team’s biggest challenge is deployment reliability, adopting a new code review tool might be a good practice, but it’s not ruthlessly helpful right now. Focus on practices that move the needle on your most pressing challenges.

4. Archetypal (Providing Clear Examples)

The practice should come with concrete examples and implementation patterns that your team can follow. Abstract principles are useful for understanding, but teams need specific guidance to implement practices successfully.

Look for practices that include not just the “what” and “why,” but the “how”—with code examples, tool configurations, and step-by-step implementation guidance.

Applying the Framework: A Modern Example

Let’s apply this framework to a practice many teams are considering today: AI-assisted development with tools like GitHub Copilot.

Is it Practicable? For most development teams in 2025, yes. The tools are accessible, integrate with existing workflows, and don’t require massive infrastructure changes. However, teams need basic proficiency with their existing development tools and some familiarity with code review practices.

Is it Generally Accepted? Increasingly, yes. Major organizations are adopting AI assistance, there’s growing community knowledge, and the tools are becoming standard in many development environments. We’re past the experimental phase for basic AI assistance.

Is it Valuable? This depends entirely on your context. If your team spends significant time on boilerplate code, routine testing, or documentation, AI assistance can provide measurable value. If your primary challenges are architectural decisions or complex domain logic, the value may be limited.

Is it Archetypal? Yes, with caveats. There are clear patterns for effective AI tool usage, but teams need to develop their own guidelines for code review, quality assurance, and skill development in an AI-assisted environment.

For many teams today, AI-assisted development meets the criteria for a ruthlessly helpful practice. But notice how the evaluation depends on team context, current challenges, and implementation readiness.

Common Implementation Obstacles

Even when a practice meets all four criteria, teams often struggle with adoption. Here are the most common obstacles I’ve observed:

Obstacle 1: All-or-Nothing Thinking

Teams often try to implement practices perfectly from day one. This leads to overwhelm and abandonment when reality doesn’t match expectations.

Solution: Embrace incremental adoption. Start with the simplest, highest-value aspects of a practice and build competency over time. Perfect implementation is the enemy of practical progress.

Obstacle 2: Tool-First Thinking

Many teams choose tools before understanding the practice, leading to solutions that don’t fit their actual needs.

Solution: Understand the practice principles first, then evaluate tools against your specific requirements. The best tool is the one that fits your team’s context and constraints.

Obstacle 3: Ignoring Cultural Readiness

Technical practices often require cultural changes that teams underestimate or ignore entirely.

Solution: Address cultural and process changes explicitly. Plan for training, communication, and gradual behavior change alongside technical implementation.

Quantifying the Benefits

One of the most important aspects of adopting new practices is measuring their impact. Here’s how to approach measurement for ruthlessly helpful practices:

Leading Indicators

- Time invested in practice adoption and training

- Number of team members actively using the practice

- Frequency of practice application

Lagging Indicators

- Improvement in the specific problems the practice was meant to solve

- Team satisfaction and confidence with the practice

- Broader team effectiveness metrics (deployment frequency, lead time, etc.)

Example Metrics for AI-Assisted Development

- Time savings: Reduction in time spent on routine coding tasks

- Code quality: Defect rates in AI-assisted vs. manually written code

- Learning velocity: Time for new team members to become productive

- Team satisfaction: Developer experience surveys focusing on AI tool effectiveness
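For the code quality metric, one simple approach is to normalize defects by the amount of code changed and compare the two groups. The Python sketch below assumes you can label changes as AI-assisted or manually written and trace defects back to them, which is itself a nontrivial assumption; the function name and all of the numbers are illustrative.

```python
def defects_per_kloc(defects: int, lines_changed: int) -> float:
    """Defects per thousand lines of changed code."""
    if lines_changed <= 0:
        raise ValueError("lines_changed must be positive")
    return defects / (lines_changed / 1000)


# Made-up quarterly totals for each group of changes.
ai_rate = defects_per_kloc(defects=9, lines_changed=6000)
manual_rate = defects_per_kloc(defects=8, lines_changed=4000)

# Negative means the AI-assisted code had proportionally fewer defects.
relative_difference = (ai_rate - manual_rate) / manual_rate
```

Here the AI-assisted rate is 1.5 defects per thousand lines against 2.0 for manually written code, a relative difference of -25%. Treat a result like this as a prompt for investigation rather than proof, since small samples and differences in task difficulty can easily account for it.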

Next Steps: Evaluating Your Practices

Take inventory of your current development practices using the ruthlessly helpful framework:

- List your current practices: Write down the development practices your team currently follows

- Evaluate each practice: Does it meet all four criteria? Where are the gaps?

- Identify improvement opportunities: What problems does your team face that aren’t addressed by current practices?

- Research potential solutions: Find practices that specifically address your highest-priority problems

- Start small: Choose one practice that clearly meets all four criteria and begin incremental implementation
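If it helps to keep the inventory in a structured form, the evaluation step can be sketched in a few lines of Python. The `PracticeEvaluation` type and its method names are hypothetical; the four criteria are the ones defined earlier in this post, and the example reuses the code review tool scenario from the “Valuable” criterion.

```python
from dataclasses import dataclass

CRITERIA = ("practicable", "generally_accepted", "valuable", "archetypal")


@dataclass
class PracticeEvaluation:
    """One team's judgment of a practice against the four criteria."""
    practice: str
    practicable: bool
    generally_accepted: bool
    valuable: bool
    archetypal: bool

    def gaps(self) -> list:
        """Names of the criteria this practice does not currently meet."""
        return [name for name in CRITERIA if not getattr(self, name)]

    def ruthlessly_helpful(self) -> bool:
        """A practice qualifies only when it meets all four criteria."""
        return not self.gaps()


# A team whose biggest challenge is deployment reliability evaluates a new
# code review tool: a good practice, but not valuable for them right now.
review_tool = PracticeEvaluation(
    practice="new code review tool",
    practicable=True,
    generally_accepted=True,
    valuable=False,
    archetypal=True,
)
```

The answers are judgment calls made by a particular team at a particular time, so the same practice can and should be re-evaluated as the team’s context changes.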

Remember, the goal isn’t to adopt the most practices or the most advanced practices. The goal is to adopt practices that make your team more effective at delivering value.

Commentary

Fourteen years after writing Pro .NET Best Practices, I’m more convinced than ever that context matters more than consensus when it comes to development practices. The “best practice” approach encourages cargo cult programming—teams adopting practices because they worked somewhere else, without understanding why or whether they fit the current situation.

The ruthlessly helpful framework isn’t just about practice selection—it’s about developing judgment. Teams that learn to evaluate practices systematically become better at adapting to change, making strategic decisions, and avoiding the latest hype cycles.

What’s changed since 2011 is the velocity of change in our industry. New frameworks, tools, and practices emerge constantly. The teams that thrive aren’t necessarily those with the best individual practices—they’re the teams that can quickly and accurately evaluate what’s worth adopting and what’s worth ignoring.

The irony is that by being more selective and contextual about practice adoption, teams often end up with better practices overall. They invest their limited time and energy in changes that actually move the needle, rather than spreading themselves thin across the latest trends.

The most successful teams I work with today have learned to be ruthlessly helpful to themselves—choosing practices that fit their context, solve their problems, and provide clear paths to implementation. It’s a more humble approach than chasing “best practices,” but it’s also more effective.

What practices is your team considering? How do they measure against the ruthlessly helpful criteria? Share your thoughts and experiences in the comments below.

Thank You AgileDC 2025

Posted on October 28, 2025

A very big thank you to AgileDC 2025 for hosting our presentation yesterday. Fadi Stephan and I gave a talk titled “Back to the Future – A look back at Agile Engineering Practices and their Future with AI,” which offered our experience and perspective on a few important questions:

- As a developer, if AI is writing the code, what’s my role?

- As a coach, are the technical practices I’ve been evangelizing for years still relevant?

- Do we still care about quality engineering?

- Do we still need to follow design best practices?

- What about techniques like Test-Driven Development (TDD) and pairing?

Agile Engineering with AI

I want to thank Fadi for co-presenting and for the hard work he put into our discussing, debating, and deliberating on the topic of Agile Engineering with AI. Our work together continues to shape my thinking on software development. I will have a follow-on blog post that covers our presentation in depth.

If you are interested in learning about AI TDD, building quality products with AI, or other advanced topics, then I recommend you check out the Kaizenko offerings.

I highly recommend Fadi’s coaching and training. It’s top shelf. It’s practical, hands-on, and it’s the best place to start to elevate your know-how and get to the next level.

Check out all that Fadi does here: https://www.kaizenko.com/

Coding with AI

These days I’m doing a lot of Coding with AI, which you can see on my YouTube channel, @stephenritchie4462. You’ll find various playlists of interest. For the Coding with AI playlist, I basically record myself performing an AI-assisted development task. I try ideas out in a variety of ways, as a way to explore. If you are an AI skeptic, I recommend experimenting just to see how being an AI Explorer feels. I was surprised by how interesting and useful and fun coding with AI can be.

Sessions I Attended

First, I attended the keynote speech by Zuzana “Zuzi” Šochová on “Organizational Guide to Business Agility”. I enjoyed many of the ideas that Zuzi brought out:

- Start with a clear strategic purpose: Agility is how you achieve it, not why your org exists.

- Leadership is a mindset, not a title; anyone can step up, take responsibility, and model new behaviors.

- Combine adaptive governance with cultural shifts because being too rigid kills growth, and being too loose breeds chaos.

- Transformation isn’t a big bang; it’s iterative. Take tiny steps, inspect and adapt, retain a system-level awareness.

- Enable radical transparency, shared decision-making, and leader–leader dynamics to scale trust and autonomy.

Note that AgileDC is on her Top 10 Agile conferences to attend in 2025.

Then I attended the Sponsor Panel discussion on The State of Agile in the DC Region. It was a thought-provoking discussion with a somber yet hopeful tone. The DC region is certainly undergoing changes, and managing the transition will be hard.

For Session 1, I attended Industrial Driven Development (IDD) by Jim Damato and Pete Oliver-Krueger. For me, industrialization is a fascinating topic. It’s about building the machine that builds the machine. In other words, manufacturing a part or product in the physical world requires engineers to build a system of machines that build the part or product. I am amazed at what their consulting work has accomplished with regard to shortening lead times.

Next, I attended Delivering value with Impact by Andrew Long. This was the most thought-provoking session of the day. I particularly liked the useful metaphors on connecting Action to Customer to Behavior to Impact. There were several key insights related to using customer behavior change as a leverage point to increase the business impact you receive from your team’s actions.

After a hearty lunch and catching up with Sean George, I attended the Middle guard in Midgard… session by David Fogel. The topic is how the Old Guard (fixed mindset) and the New Guard (growth mindset) represent two different camps found in an Agile transformation. Midgard is the present reality. So, as an Agile Coach, you’re in the present reality of working with the Middle Guard, who have a mix of fixed-mindset reservations and growth-mindset desires. After the topic was introduced, the session was facilitated using “Pass the cards” per Jean Tabaka (or the 35 Shuffle technique), which was a masterclass in how to use dot voting efficiently in a workshop. A lot of good knowledge sharing.

Next, I attended AI Pair Programming: Human-Centered Development in the Age of Vibe Coding by George Lively. From my software engineering perspective, what I learned here will provide the most grist for my follow-on learning and experimentation. George walked us through an excellent experiment that demonstrated how AI-assisted software development can both accelerate delivery and be well managed. He applied static code analysis, test code coverage, quality metrics, and DORA metrics in a way that shows how AI Pair Programming can work well.

Next was Fadi and I at the 3:15pm session. As I mentioned above, I will blog separately on our session topic.

Finally, I sat in on Richard Cheng’s From Painful to Powerful: Sprint Planning & Sprint Review That Actually Work session. Richard has an excellent way of explaining the practical application of the Scrum Framework. He takes the concepts and framework and gives clear advice on how to improve the events, such as Sprint Planning and Sprint Review. In this session, he reminded me of some of the pitfalls that trip me up to this day; I need to stop forgetting how to avoid them. The session showcased why Richard is an excellent Certified Scrum Trainer (CST), and his training never disappoints. Check out his offerings: https://www.agilityprimesolutions.com/training

As many of you might know, Richard and I used to co-train (though I was never on an equal footing) when we both worked together at the training org that is now Sprightbulb Learning.

Stay in Touch

In addition to attending sessions and learning a lot, it was great to catch up with friends and former colleagues who attended the conference. Some I hadn’t seen in years. A big highlight of AgileDC is the connections and reconnections in the DC area’s Agile community.

You’ll find Fadi on LinkedIn here: https://www.linkedin.com/in/fadistephan/

You’ll find my LinkedIn here: https://www.linkedin.com/in/sritchie/

Take care, please stay in touch, and I hope to see you next time!